“Think of SentraCoreAI™ like Aflac for AI and cybersecurity—we don’t replace your systems or tools, we make sure they work when it matters most.

SentraCoreAI™ is the governance drone: hovering above the digital battlefield, scanning for invisible threats, flagging behavioral drift, and signaling danger before an undetected failure strikes, so that investors, businesses, and governments don’t pay the price for those failures and your reputation stays intact.”

Badges of Verified Trust

0. Why Now?

- 42% of businesses abandoned most of their AI initiatives in 2025, up from 17% in 2024, citing cost, data privacy, and security risks (S&P Global Market Intelligence)

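- EU AI Act obligations are phasing in: prohibited-practice rules have applied since February 2025 and general-purpose AI obligations since August 2025; compliance is mandatory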

- April 2025: U.S. Executive Order launches national AI literacy initiative for high schools

- $1.035T+ updated TAM including AI-Education trust layer expansion

"We’re not in the age of AI — we’re in the age of accountability."

Trust isn’t a feature. It’s the firewall of the AI era. And right now? It’s failing — quietly, constantly, and catastrophically. AI systems are making billion-dollar decisions without oversight. They're hallucinating, drifting, leaking, and lying — and no one’s watching. SentraCoreAI™ was built for this exact moment: when regulation is behind, risk is exploding, and trust is the new attack surface. The next wave of AI lawsuits, breaches, and breakdowns won’t come from bad actors — they’ll come from trusted systems that were never truly audited. We audit the auditors. We audit the models. We close the trust loop.

New Use Case: 🎓 SentraEd™ - Education Trust Layer

Context: In April 2025, an Executive Order launched a national AI education rollout for high schools across the U.S. Yet no trust auditing layer exists.

- SentraCoreAI audits educational AIs for bias, hallucination, and misinformation

- Generates Trust Capsules for district and parent-facing transparency

- Applies same modules to EdTech vendors, tutoring bots, grading LLMs

- Can operate in FERPA-compliant & localized formats

“AI is now in the classroom. But who audits the teacher bots?”

New Use Case: Audit Capsules™ scale to survive agent swarm events

1. The Problem

- Litigation against AI and autonomous systems is just beginning — but it will define the next decade.

- AI agents and cybersecurity platforms are already failing quietly, invisibly, and sometimes catastrophically.

- Investors, governments, and enterprises are onboarding systems they can’t fully verify — creating untraceable liabilities.

- Trust is assumed, not proven — and systems built on hallucinations, biased logic, or incomplete models are already shaping decisions with financial, legal, and political consequences.

- And every day, they get breached. Or they break. Or they lie — and no one knows until it’s too late.

- There is no trusted, autonomous layer auditing these risks in real time.

- Regulations are coming — and most systems aren't ready.

“According to a March 2025 report from S&P Global Market Intelligence, 42% of businesses have abandoned most of their AI initiatives this year — a sharp rise from 17% in 2024. This finding comes from a survey of over 1,000 organizations across North America and Europe.

The primary reasons cited for these project failures include high costs, data privacy concerns, and security risks.

Additionally, the report notes that organizations scrapped 46% of AI proof-of-concepts before reaching production — a clear sign of the friction in scaling AI from pilot to deployment.”

2. The Solution

SentraCoreAI™ is the first and only system built to audit the age of autonomous decision-making. We created AAaaS™ — Autonomous Audit as a Service™ to continuously inspect, verify, and prove whether AI agents and cybersecurity systems are trustworthy — before litigation, breach, or public collapse ever happens. This isn’t monitoring. This is third-party autonomous verification — at machine speed. SentraCoreAI™ closes the blind spot by delivering:

- Behavioral audits for LLMs, agents, and autonomous decision logic — including hallucination detection, prompt drift, and bias traceability

- Cyber posture scoring of critical infrastructure (APIs, firewalls, zero-trust stacks, cloud integrations)

- Black Reports™ (private forensic trust audits)

- Legal risk classifiers mapping outputs to GDPR, HIPAA, AI Act, and evolving global regulations

- Zero-Knowledge Trust Capsules — cryptographically verifiable logs of trust, failure, and intent

- SentraScore™ trust ratings and SentraLoop™ re-audit feedback engine

Trust is no longer assumed. It’s audited, verified, and provable. SentraCoreAI™ doesn't just flag failures. It prevents the fallout. This is infrastructure — AAaaS™ is foundational tech for government, enterprise, and regulation.

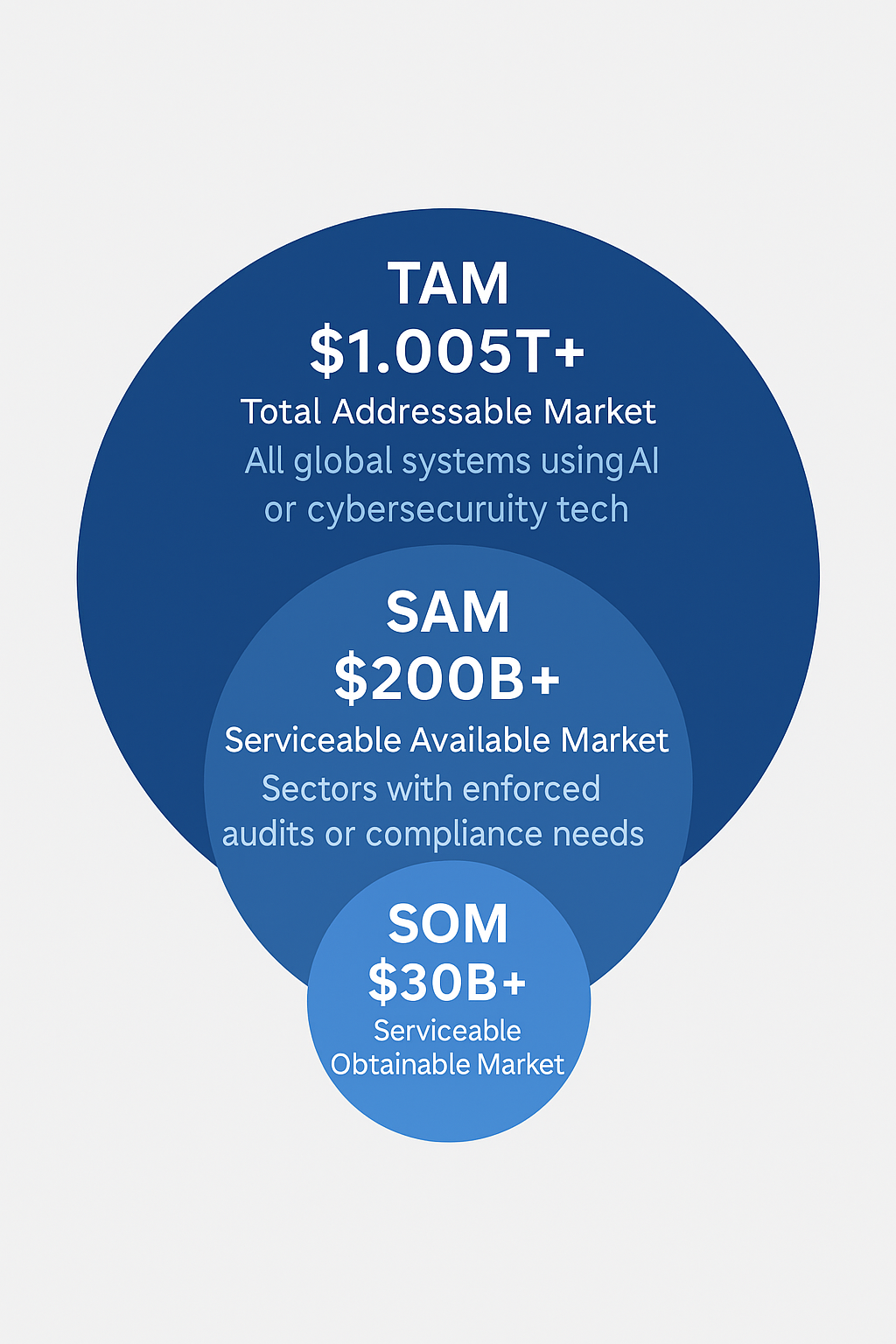

3. Market

Market Size Breakdown

| Sector | Market Size / Impact | SentraCoreAI Touchpoint |
|---|---|---|
| AI Adoption | $300B+ | Behavior, hallucination, drift audits |
| Cybersecurity | $250B+ | API, SIEM, and security stack audits |
| RegTech & Compliance | $130B+ | Legal risk classifiers, trust capsules |
| Education (EdTech + Public) | $30B+ | Audit AI tutors, teachers, curriculum AIs |
| Financial Services | $60B+ | Audit for hallucinated compliance & bias |
| Healthcare + MedTech | $40B+ | Trust capsules for AI diagnosis tools |
| Government Contractors | $75B+ | Mandated trust layer |
| Retail + Consumer Apps | $30B+ | Sentiment & misinformation tests |
| VC-Backed Startups (AI-native) | $120B+ | Trust score transparency in due diligence |
| Defense, Law Enforcement | Classified | Behavior assurance & drift testing |

→ Updated TAM: $1.035 Trillion+

- 🧠 AI Adoption ($300B+) — Enterprises and governments are deploying large language models and autonomous agents at scale — but with no independent trust layer.

- 🔐 Cybersecurity Spend ($250B+) — As zero-trust becomes table stakes, CISOs and tech buyers now seek validation of the tools themselves.

- 🏛️ RegTech & Compliance ($130B+) — Every upcoming AI law (EU AI Act, U.S. EO, Brazil LGPD) will require traceable, auditable behavior at model and system level.

- 📈 Core Addressable Market: $680B+ — SentraCoreAI™ sits at the intersection of these three sectors (AI adoption, cybersecurity, and RegTech), defining a new category, AAaaS™, as the required trust fabric for every autonomous system. The remaining sectors in the table bring the updated TAM to $1.035T+. Every system that makes decisions will require autonomous, external, provable oversight. That’s AAaaS™. And it’s not optional anymore.
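The two market figures quoted in this section can be reconciled by summing the table rows. The sketch below is only an arithmetic check: the dictionary and variable names are illustrative, the figures are copied from the Market Size Breakdown table, and the "Classified" Defense row is left out because it carries no number.

```python
# Sector figures in billions of USD, copied from the Market Size Breakdown table.
# Defense / Law Enforcement is listed as "Classified" and is excluded here.
SECTORS_B = {
    "AI Adoption": 300,
    "Cybersecurity": 250,
    "RegTech & Compliance": 130,
    "Education (EdTech + Public)": 30,
    "Financial Services": 60,
    "Healthcare + MedTech": 40,
    "Government Contractors": 75,
    "Retail + Consumer Apps": 30,
    "VC-Backed Startups (AI-native)": 120,
}

# The three sectors the narrative bullets describe as the core intersection.
CORE = ("AI Adoption", "Cybersecurity", "RegTech & Compliance")

core_tam_b = sum(SECTORS_B[name] for name in CORE)
updated_tam_b = sum(SECTORS_B.values())

print(f"Core three-sector market: ${core_tam_b}B+")      # $680B+
print(f"Updated TAM: ${updated_tam_b / 1000:.3f}T+")      # $1.035T+
```

The $680B+ figure covers only the first three rows, while $1.035T+ is the sum of all nine quantified rows.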

4a. Financial Projections

- 2025: $3.2M ARR | 18 pilot clients | 4 Gov/Reg contracts

- 2026: $12.5M ARR | 50+ enterprise clients | 5 major platform integrations

- 2027: $34M ARR | 100+ clients | Full-scale compliance SaaS layering

Gross margin 83% | Trust-layer licensing & real-time audit subscription model
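For readers who want the growth rates implied by these ARR targets, a minimal sketch follows. The function names are illustrative and the only inputs are the three ARR figures stated above; nothing here is a forecast beyond those numbers.

```python
# ARR targets in millions of USD, taken from the projections above.
ARR_M = {2025: 3.2, 2026: 12.5, 2027: 34.0}

def yoy_growth(prev: float, curr: float) -> float:
    """Year-over-year growth as a fraction (1.0 means +100%)."""
    return curr / prev - 1.0

def cagr(first: float, last: float, periods: int) -> float:
    """Compound annual growth rate over the given number of yearly periods."""
    return (last / first) ** (1.0 / periods) - 1.0

years = sorted(ARR_M)
for prev_y, curr_y in zip(years, years[1:]):
    growth = yoy_growth(ARR_M[prev_y], ARR_M[curr_y])
    print(f"{prev_y} -> {curr_y}: {growth:.0%} growth")

two_year_cagr = cagr(ARR_M[2025], ARR_M[2027], periods=2)
print(f"2025 -> 2027 CAGR: {two_year_cagr:.0%}")
```

The plan implies roughly a 3.9x step from 2025 to 2026 and a 2.7x step from 2026 to 2027.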

4b. Go-to-Market Strategy

Phase 1: Direct sales to compliance-focused enterprises (Fortune 500, Gov contractors)

Phase 2: RegTech integrations & compliance API ecosystem partnerships

Phase 3: Embedded trust badge program and Trust Capsule certification

- Currently onboarding 4 active gov & regulatory groups

- Partner pilots underway with 3 cybersecurity vendors

We Invented AAaaS™

Autonomous Audit as a Service™ — continuous, cryptographic, third-party trust validation for AI and cybersecurity systems. SentraCoreAI™ is defining this new category.

No humans in the loop. No excuses. Just verified trust.

- Continuous forensic scoring of AI & cyber infrastructure

- Behavioral drift detection, zero-knowledge fingerprinting

- Legal mapping + plugin-aware audit stack

5. What We Do

- 🧠 Audit AI systems (OpenAI, Claude, Gemini, Mistral, LLaMA) to detect hallucinations, manipulation, and drift in real time

- 🔐 Score cybersecurity stacks including APIs, firewalls, SIEMs, and zero-trust systems for trust posture and breach risk

- 📉 Detect trust failures across AI and cyber layers — including prompt injection, policy violations, and behavioral anomalies

- 📜 Publish Red Reports™ for public accountability, trust signaling, and external audit transparency

- 📁 Deliver Black Reports™ with forensic-grade evidence, legal mapping, and breach timeline reconstruction

- 🧬 Fingerprint model behavior to track drift, changes in tone, and unauthorized modifications over time

- 📎 Audit plugin and API integrations to surface hidden risks in model wrappers, external calls, and embedded behavior

- ⚖️ Map legal and regulatory exposure across GDPR, HIPAA, CCPA, the AI Act, and evolving global laws

- ⟳ Re-audit using SentraLoop™, an autonomous engine for continuous feedback and adaptive scoring

- 📂 Package evidence in Trust Capsules™, including cryptographic audit proofs, logs, and full forensic payloads

- 📚 Audit AI tools used in education environments — including hallucination checks, bias tagging, and curriculum-integrity scoring

- 🎓 Assist districts and governments with Trust Capsule™ exports for classroom AI deployments (Redacted + Parent-Facing Versions)

6. Product Overview

Every SentraCoreAI™ deployment installs a modular Trust Operating Layer, enabling real-time oversight across all system behaviors, inputs, and integrations.

- 📊 SentraScore™ — Dynamic, modular trust scoring engine for AI, cybersecurity, and behavioral systems — cryptographically signed and transparent

- 🔁 SentraLoop™ — Real-time re-audit feedback loop that continuously tests, validates, and adjusts trust scores based on new outputs and behaviors

- 🤖 SentraBot™ — Autonomous evaluation of robotic systems, IoT devices, and embedded agents for manipulation, failure, and operational integrity

- 🔍 Plugin-Aware Audit Engine — Full-stack wrapper and integration analysis across APIs, AI agents, external calls, and connected platforms

- 🔒 Trust Capsule™ — Forensic-grade package of audit logs, hallucination chains, model fingerprints, and legal risk classifiers — sealed with zero-knowledge proofs
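To make "tamper-evident audit logs" concrete, here is a minimal sketch of a hash-chained log, one common way to make after-the-fact edits detectable. It is an illustration under stated assumptions, not the Trust Capsule™ format: the entry layout, the `GENESIS` value, and every function name are hypothetical, and a hash chain is not a zero-knowledge proof.

```python
import hashlib
import json

GENESIS = "0" * 64  # hypothetical starting value for the chain

def entry_digest(prev_hash: str, payload: dict) -> str:
    """SHA-256 over the previous hash plus a canonical JSON form of the payload."""
    body = json.dumps(payload, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256((prev_hash + body).encode("utf-8")).hexdigest()

def append_entry(chain: list, payload: dict) -> None:
    """Append a payload, linking it to the hash of the previous entry."""
    prev_hash = chain[-1]["hash"] if chain else GENESIS
    chain.append({
        "payload": payload,
        "prev": prev_hash,
        "hash": entry_digest(prev_hash, payload),
    })

def verify_chain(chain: list) -> bool:
    """Recompute every digest; any edited payload or broken link fails."""
    prev_hash = GENESIS
    for entry in chain:
        if entry["prev"] != prev_hash:
            return False
        if entry["hash"] != entry_digest(prev_hash, entry["payload"]):
            return False
        prev_hash = entry["hash"]
    return True

capsule_log = []
append_entry(capsule_log, {"check": "hallucination", "result": "pass"})
append_entry(capsule_log, {"check": "bias", "result": "flag"})
print(verify_chain(capsule_log))  # True

capsule_log[0]["payload"]["result"] = "fail"  # simulate tampering
print(verify_chain(capsule_log))  # False
```

Because each digest covers the previous one, changing any earlier entry invalidates every entry after it, which is what lets a third party check a log without trusting whoever stored it.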

🔴 Red Report™

Public Audit Exposure

- 📢 Audits of public AI systems

- 🧠 Bias, hallucination & trust scores

- 📊 Trust badges + transparency scorecard

- 🔎 Behavioral snapshots & legal risk alerts

- 🌐 Shared with press, partners, and public

⚫ Black Report™

Confidential Audit Dossier

- 🔐 Private client-targeted audits

- ⚖️ Legal exposure + compliance mapping

- 📁 Includes raw logs + forensic annotations

- 🧩 Plugin-aware threat stack analysis

- 🕵️ Sent anonymously or under NDA

7. Traction

- ✅ Autonomous audits completed across GPT-4, Claude, Gemini, LLaMA, and Mistral — stress-testing hallucination, bias, and behavioral drift

- ✅ Red Reports™ 1–6 released, gaining attention from VCs, AI labs, and compliance professionals across LinkedIn, Substack, and enterprise forums

- ✅ Black Report #1 delivered — a full-scope forensic audit for a confidential enterprise client including trust fingerprint, hallucination chain, and legal mapping

- ✅ Podcast (The Trust Experiment™) launched — weekly episodes combining Red Report reveals, AI trust commentary, and guest insights from auditors, regulators, and insiders

- ✅ VC outreach and media traction underway — with multiple inbound requests from analysts, government tech offices, and enterprise compliance teams

- ✅ Government and private sector onboarding active, including agencies seeking zero-knowledge proof of AI safety, and vendors requiring trust badges for procurement

8. Business Model

- 📦 Enterprise AAaaS™ Subscription: starts at $7.5K/month
  - Tiered based on:
    - Audit volume (models/systems audited)
    - Frequency (monthly, weekly, real-time)
    - Jurisdiction complexity (U.S., EU, global)
  - Includes basic access to Red Reports, SentraScore™, and dashboards

- 📄 Red & Black Reports™ (à la carte)
  - Red Reports™: $1,000–$2,500. Public-facing trust scans, ideal for AI startups and visibility
  - Black Reports™: $10,000–$50,000+. Full forensic package with legal clause risk, fingerprint logs, hallucination chain, and downloadable Trust Capsule; often used in enterprise due diligence or litigation protection

- 📊 Partner & White-Labeled Portals: $25K–$100K/year
  - For vendors, compliance teams, and AI platforms that need recurring audits and client-facing trust reports

- 🏛️ SentraSecure™ (Gov Contracts): $500K–$3M+ based on scope
  - National infrastructure auditing, pre-regulation readiness, model behavior fingerprinting for agencies

- 🛡️ SentraScore™ API & Trust Badge Licensing
  - $5K/year for badge use (marketing/compliance)
  - $10K–$50K/year for full API + badge combo
  - Especially valuable for AI vendors selling to enterprise/government (“certified by SentraCoreAI™”)

9. Competitive Advantage

SentraCoreAI™ isn’t just another audit tool. It’s the first platform to define and dominate the AAaaS™ category — Autonomous Audit as a Service. We’re not competing in someone else’s market. We created the trust layer for AI and cybersecurity. Key Differentiators:

- ✅ First mover in AAaaS™ category

- ✅ Dual audit layers: AI & Cybersecurity

- ✅ Public & private audit architecture

- ✅ Real-time behavioral re-audits

- ✅ Legal risk mapping + forensic outputs

- ✅ First to market with autonomous, full-stack trust auditing

| Feature | SentraCoreAI™ | Competitors |
|---|---|---|
| Autonomous AI audits | ✅ | ❌ |
| Cybersecurity trust scoring | ✅ | ❌ |
| Red & Black Report architecture | ✅ | ⚠️ |
| Legal risk classifier | ✅ | ❌ |
| Behavioral re-audit (SentraLoop™) | ✅ | ❌ |
| Re-audit feedback loop | ✅ | ❌ |

Others offer observability. SentraCoreAI™ offers verified accountability. We’re not watching the machine. We’re governing it.

Comparable Company Landscape

| Company | Annual Revenue | Monthly Pricing | Focus Area | Why It’s a Comp |
|---|---|---|---|---|
| Palantir | ~$2.9B | $500K–$10M+ | Big data analytics for gov + enterprise | Systems-level oversight at massive contract scale |
| Rapid7 | ~$844M | $5K–$20K+ | Cybersecurity, SIEM, compliance | Proves enterprise cyber tools demand high price |
| BigID | $100M+ | $10K–$25K+ | Data privacy, risk classification | Legal mapping + data trust insight |
| TrustArc | ~$75M | $3K–$12K | GDPR/privacy SaaS compliance | Entry-level comp for audit-driven compliance tools |
| Tala Security | <$5M | $1K–$8K | Web app/API security | Plugin-layer visibility, mirrors API audit logic |

SentraCoreAI™ Pricing Position

| Product | Projected Pricing | Value Delivered |
|---|---|---|
| AAaaS™ Platform | $7.5K–$25K+/month | Full-stack autonomous audit: AI, cyber, legal, drift |
| Red/Black Reports™ | $1K–$50K/report | From trust scans to forensic, litigation-ready audits |
| Trust Portals (White-label) | $25K–$100K/year | For vendors & compliance teams to visualize re-audits |
| SentraSecure™ Gov Contracts | $500K–$3M+ | Infrastructure auditing, regulatory reporting readiness |
| SentraScore™ API & Badging | $5K–$50K/year | Trust scores, badges, ZK audit proofs for resale/validation |

10. The Black Report™

Our flagship private forensic audit — including fingerprint trails, legal clause risk, and hallucination chains. Used by enterprises and governments to trace exposure before breach or litigation. Full behavioral evidence stack downloadable via Trust Capsule ZIP.

11. Vision

In 5 years, every AI and cybersecurity system will be SentraAudited™.

SentraCoreAI™ will be the ISO of trust. The AAaaS™ standard. The watchdog between society and machine logic. This isn’t just funding a platform. It’s launching the AAaaS™ category and scaling the Trust OS every AI and cybersecurity system will require. SentraCoreAI™ is becoming the Trust OS for AI and cybersecurity — the silent layer that governs, verifies, and protects machine decision-making at scale.

12. Team

- Frank Carrasco — Founder

  - BSc Cybersecurity, MSc AI/ML, PhD InfoSec (in progress)

  - 10+ years in security, regulation, and audit systems

  - Host of The Trust Experiment™ podcast

- Juan Pablo Gonzalez — Data Engineer

  - BSc Physics

  - Completing MSc and PhD

13. The Ask

- 💸 Raising: $6.5M Seed+ Round (with flexibility up to $8M) to scale SentraCoreAI™ as the global Trust OS™ for AI and cybersecurity systems.

Use of Funds:

- 🧠 30% Engineering + Audit Infrastructure — hiring for AI red-teaming, ZK protocol integration, plugin risk detection, and Trust Capsule delivery

- 🚀 20% Go-to-Market + Regulatory Partnerships — VC enablement, government onboarding, Red Report™ campaigns, and podcast/media expansion

- 📈 20% Enterprise Productization — dashboard deployments, Black Report ops, and API delivery tools for AI vendors and compliance teams

- ⚖️ 15% Legal, Compliance, and Cert Infrastructure — counsel, regulatory audits, ISO-equivalent certification, and hiring/salaries

- 💼 15% Sales and Marketing
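The percentages above can be turned into dollar amounts with a few lines of arithmetic. This sketch assumes the base $6.5M raise rather than the $8M upper bound; the dictionary and variable names are illustrative.

```python
RAISE_USD = 6_500_000  # base raise; the ask notes flexibility up to $8M

# Percentages copied from the use-of-funds list above.
ALLOCATION_PCT = {
    "Engineering + Audit Infrastructure": 30,
    "Go-to-Market + Regulatory Partnerships": 20,
    "Enterprise Productization": 20,
    "Legal, Compliance, and Cert Infrastructure": 15,
    "Sales and Marketing": 15,
}

# Sanity check: the five buckets should cover the whole round.
assert sum(ALLOCATION_PCT.values()) == 100

# Integer arithmetic keeps the dollar amounts exact.
allocation_usd = {
    name: RAISE_USD * pct // 100 for name, pct in ALLOCATION_PCT.items()
}

for name, usd in allocation_usd.items():
    print(f"{name}: ${usd:,}")
```

At $6.5M this works out to $1.95M for engineering, $1.3M each for go-to-market and productization, and $975K each for legal/compliance and sales/marketing.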

14. Visual Architecture: SentraLoop

- Client connects via API/plugin

- SentraLoop initiates autonomous test suite

- Behavior & legal classifiers activate

- Trust Capsule generated → encrypted → submitted or stored
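The four steps above can be sketched as a small staged pipeline. Everything in this example is a hypothetical stand-in: the `Capsule` fields, the placeholder checks, and the in-memory `store` are assumptions made for illustration, and the encryption step is deliberately left out rather than faked.

```python
import json
from dataclasses import dataclass, field, asdict

@dataclass
class Capsule:
    """Hypothetical container for one audit run's results."""
    client_id: str
    test_results: dict = field(default_factory=dict)
    classifier_flags: list = field(default_factory=list)

def run_test_suite(outputs: list) -> dict:
    # Placeholder check: counts empty responses; a real suite would test far more.
    return {"total": len(outputs), "empty": sum(1 for o in outputs if not o.strip())}

def run_classifiers(outputs: list) -> list:
    # Placeholder keyword rule standing in for behavior and legal classifiers.
    return [f"possible-pii:{i}" for i, o in enumerate(outputs) if "ssn" in o.lower()]

def build_capsule(client_id: str, outputs: list) -> Capsule:
    # Stages run in the order listed above: tests first, then classifiers.
    return Capsule(client_id, run_test_suite(outputs), run_classifiers(outputs))

def serialize(capsule: Capsule) -> bytes:
    # Encryption is intentionally omitted; a deployment would encrypt these bytes.
    return json.dumps(asdict(capsule), sort_keys=True).encode("utf-8")

# Sample model outputs a connected client might submit (made up for the demo).
sample_outputs = ["The capital of France is Paris.", "", "SSN: 000-00-0000"]

store = {}  # stands in for "submitted or stored"
capsule = build_capsule("client-001", sample_outputs)
store[capsule.client_id] = serialize(capsule)
print(store["client-001"].decode("utf-8"))
```

Keeping each stage as a separate function makes it straightforward to swap a placeholder for a real test suite or classifier without touching the rest of the flow.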

15. Trust Score Badge Spectrum

- Platinum: No violations detected, active capsule history

- Gold: Minor risks mitigated, no outstanding issues

- Silver: Risk flags with resolutions pending

- Uncertified: Failed trust audits, active compliance risk
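One way to read the badge tiers is as an ordered set of rules, checked from most to least severe. The sketch below is an interpretation, not a published scoring rule: the `AuditSummary` fields are hypothetical, and treating a clean system with no capsule history as Gold rather than Platinum is an assumption.

```python
from dataclasses import dataclass

@dataclass
class AuditSummary:
    """Hypothetical summary of a system's audit state."""
    failed_audit: bool          # failed trust audit or active compliance risk
    open_risk_flags: int        # risk flags with resolutions still pending
    mitigated_risks: int        # minor risks that were found and resolved
    has_capsule_history: bool   # active history of generated capsules

def badge_for(summary: AuditSummary) -> str:
    """Map an audit summary to a badge tier, most severe condition first."""
    if summary.failed_audit:
        return "Uncertified"
    if summary.open_risk_flags > 0:
        return "Silver"
    # Assumption: Platinum needs both a clean record and capsule history.
    if summary.mitigated_risks > 0 or not summary.has_capsule_history:
        return "Gold"
    return "Platinum"

examples = [
    AuditSummary(False, 0, 0, True),
    AuditSummary(False, 0, 2, True),
    AuditSummary(False, 1, 0, True),
    AuditSummary(True, 3, 0, False),
]
for summary in examples:
    print(badge_for(summary))
```

Checking the most severe condition first means a system can never receive a higher badge than its worst finding allows.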